LangChain: Simplifying LLM-Powered Application Development¶

Author: Tobias Brenner

TL;DR¶

LangChain is a framework that simplifies building applications on top of large language models (LLMs). It supports use cases such as document analysis, chatbots, and code analysis, and integrates with different language models such as BERT and GPT. Available for JavaScript and Python, LangChain provides modules for models, retrieval, chains, agents, memory, and callbacks. For Retrieval Augmented Generation (RAG), in which data retrieved from an external knowledge base is used to improve the accuracy of a language model's answers, it offers document loaders, text splitters, text embeddings, and vector stores. Agents let an LLM define the sequence of actions to take, chains compose sequences of calls, and memory and callbacks support customization and monitoring.

Introduction¶

LangChain is a framework that streamlines the development of applications built on large language models (LLMs). It covers use cases ranging from document analysis to advanced chatbots and code analysis tools. It is compatible with prominent language models such as BERT and GPT and is available for both JavaScript and Python. Its modular structure lets developers pick the components they need and combine them into customized, efficient language model applications.

Modular Design: Models, Chains, Agents and more!¶

LangSmith: Observability and Testing¶

LangSmith provides observability, monitoring, testing, and debugging for LangChain applications. It gives developers insight into application performance, which helps them keep their applications working reliably.

LangServe: Chains as REST API and Templates¶

LangServe executes chains via RESTful APIs and templates. It simplifies the orchestration of complex workflows by offering an interface for managing templates and executing chains.

Alongside these tools, LangChain consists of the following main modules, which make up its modular structure:

- Models: LangChain supports various models, including LLMs, Chat Models, and specific message types such as HumanMessage, AIMessage, and SystemMessage. Models play a pivotal role in shaping the interaction with language models, and LangChain introduces additional components:

  - Prompt: LangChain provides model-agnostic templates for input, known as PromptTemplates. Typically, a prompt is a string or a list of messages that serve as input for the language model. The template includes instructions, few-shot examples, context, and questions tailored for a specific task.

  - Output Parsers: These components transform the output from language models into suitable formats such as JSON, XML, or CSV. Output parsers are essential for structuring and organizing the information generated by the language models, ensuring compatibility with downstream applications.

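```python
# PromptTemplate lives in langchain_core; older releases also exposed it as langchain.prompts.
from langchain_core.prompts import PromptTemplate

# Placeholders in curly braces become the template's input variables.
prompt_template = PromptTemplate.from_template(
    "Tell me a {adjective} joke about {content}."
)

# Returns the string "Tell me a funny joke about chickens."
prompt_template.format(adjective="funny", content="chickens")
```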
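To make the output-parser idea concrete, here is a minimal plain-Python sketch. It deliberately does not use LangChain's parser classes; `parse_comma_separated` and `parse_json_reply` are hypothetical helper names chosen for illustration, and the model replies are hard-coded strings.

```python
import json


def parse_comma_separated(text: str) -> list[str]:
    """Split a model reply such as 'red, green, blue' into a clean list."""
    return [item.strip() for item in text.split(",") if item.strip()]


def parse_json_reply(text: str) -> dict:
    """Extract the JSON object embedded in a model reply (first '{' to last '}')."""
    start = text.find("{")
    end = text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    return json.loads(text[start : end + 1])


# Hard-coded strings stand in for real model output.
colors = parse_comma_separated("red, green , blue")
reply = parse_json_reply('Sure! {"setup": "Why?", "punchline": "Because."}')
```

Both helpers turn free-form text into a structure that downstream code can use directly, which is the role an output parser plays at the end of a chain.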

- Chains: Sequences of calls orchestrated using LangChain Expression Language (LCEL), allowing developers to create customized workflows.

```python
from langchain_openai import ChatOpenAI
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.output_parsers import StrOutputParser

prompt = ChatPromptTemplate.from_template("Tell me a short joke about {topic}")
model = ChatOpenAI(model="gpt-3.5-turbo")  # needs the OPENAI_API_KEY environment variable
output_parser = StrOutputParser()

# LCEL: the | operator pipes each component's output into the next one.
chain = prompt | model | output_parser
```
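How the `|` operator can compose steps may be easier to see in a toy version. The sketch below is not LangChain's implementation (its runnables are considerably richer); the `Step` class and the fake model are assumptions made for illustration, and no real LLM is called.

```python
class Step:
    """Wrap a function so that steps can be composed with the | operator."""

    def __init__(self, func):
        self.func = func

    def __or__(self, other):
        # a | b yields a new Step that runs a first, then feeds its result to b.
        return Step(lambda value: other.invoke(self.invoke(value)))

    def invoke(self, value):
        return self.func(value)


# Stand-ins for prompt, model, and output parser.
prompt = Step(lambda inputs: f"Tell me a short joke about {inputs['topic']}")
fake_model = Step(lambda text: {"content": f"[model reply to: {text}]"})
output_parser = Step(lambda message: message["content"])

chain = prompt | fake_model | output_parser
result = chain.invoke({"topic": "ice cream"})
```

Because each composition returns another `Step`, a chain of any length is itself a single step that can be invoked or composed further.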

-

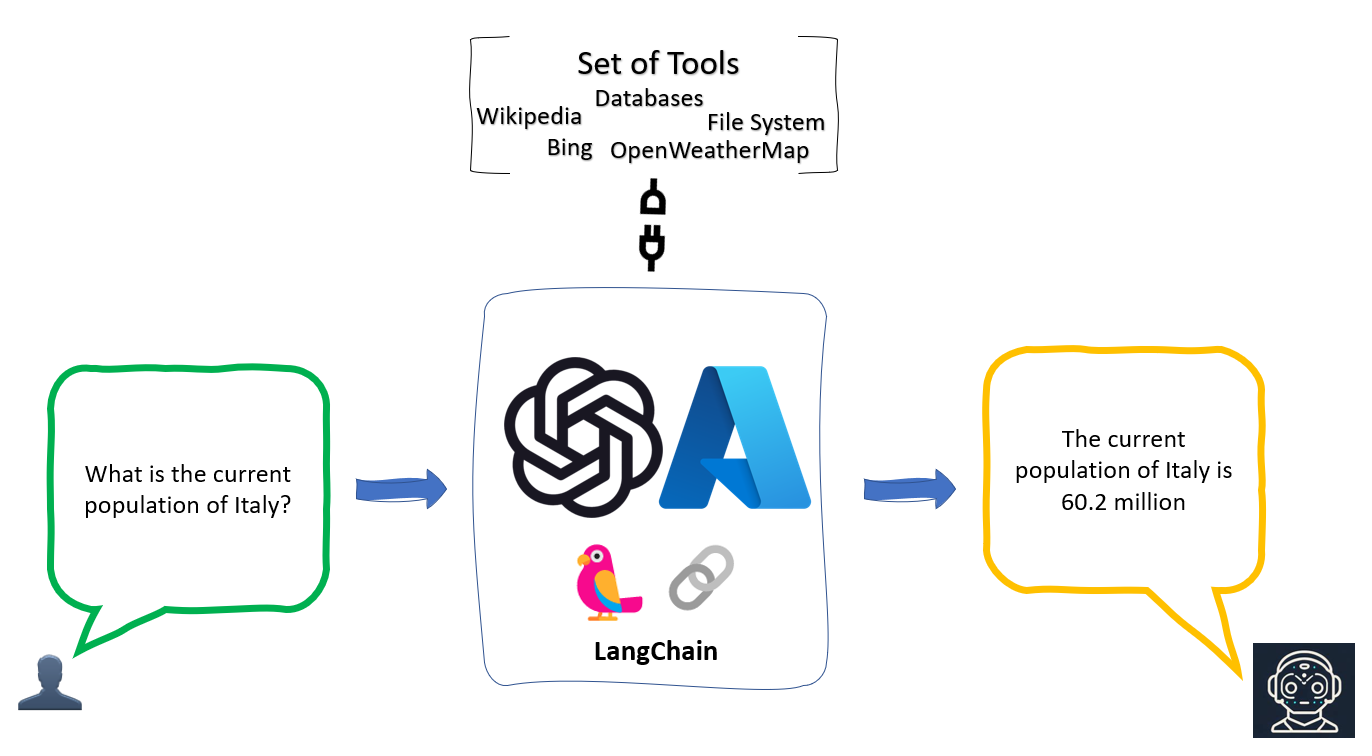

Agents: Intermediaries interacting with LLMs to define sequences of actions, with different agent types for varied scenarios.
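The agent idea, a model that decides step by step which action to take next, can be sketched as a simple loop. Everything below is hypothetical: `scripted_policy` stands in for the LLM, `TOOLS` for the available tools, and none of the names come from LangChain's agent API.

```python
def add(a: float, b: float) -> float:
    return a + b


def multiply(a: float, b: float) -> float:
    return a * b


TOOLS = {"add": add, "multiply": multiply}


def scripted_policy(history: list) -> dict:
    """Stand-in for the LLM: choose the next action from what has happened so far."""
    if not history:
        return {"tool": "add", "args": (2, 3)}
    if len(history) == 1:
        # Feed the previous observation into the next tool call.
        return {"tool": "multiply", "args": (history[-1][1], 4)}
    return {"finish": history[-1][1]}


def run_agent(policy, max_steps: int = 5):
    history = []  # list of (action, observation) pairs
    for _ in range(max_steps):
        action = policy(history)
        if "finish" in action:
            return action["finish"], history
        observation = TOOLS[action["tool"]](*action["args"])
        history.append((action, observation))
    raise RuntimeError("agent did not finish within max_steps")


answer, steps = run_agent(scripted_policy)
```

In a real agent the policy would be an LLM call that reads the history and tool descriptions; the loop around it stays essentially the same.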

- Retrieval: LangChain streamlines the retrieval process through a systematic approach:

  - Load: Various document loaders (over 100 in LangChain) handle loading data from diverse sources, such as PDFs, CSVs, or the web.

  - Transform: The framework employs different transformations, including text splitting and chunking, to prepare and structure the loaded data for retrieval.

  - Embed: LangChain utilizes embeddings to capture the semantic meaning of texts, facilitating quick identification of similar texts. It offers a standardized interface for embedding.

  - Store: The embedded data is stored in vector stores, providing over 50 integration options like ChromaDB, Redis, Postgres, and ElasticSearch.

  - Retrieve: Once the data is in the store, LangChain offers various retriever algorithms, including Parent Document Retriever and Ensemble Retriever, enabling efficient retrieval of relevant information.
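The five retrieval stages can be walked through end to end with a toy example. This is a sketch under strong simplifications, not LangChain code: an in-memory string replaces the document loader, word counts replace real embeddings, and a plain list replaces the vector store. All function names are hypothetical.

```python
import math
from collections import Counter


def split_text(text: str, chunk_size: int = 8) -> list[str]:
    """Transform: split text into chunks of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i : i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text: str) -> Counter:
    """Embed: a toy bag-of-words vector (real embeddings are dense and semantic)."""
    return Counter(word.strip(".,?!").lower() for word in text.split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Load: an in-memory string stands in for a document loader.
document = (
    "LangChain offers document loaders for many sources. "
    "Vector stores keep embedded chunks for similarity search. "
    "Retrievers return the chunks most relevant to a query."
)

# Store: a plain list of (chunk, vector) pairs stands in for a vector store.
store = [(chunk, embed(chunk)) for chunk in split_text(document)]


def retrieve(query: str, k: int = 1) -> list[str]:
    """Retrieve: rank stored chunks by cosine similarity to the query."""
    query_vector = embed(query)
    ranked = sorted(store, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


top = retrieve("Where are embedded chunks kept?")
```

In a RAG application, the chunks returned by `retrieve` would be inserted into the prompt as context before the model is called.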

- LCEL: Declarative language simplifying chain composition, enabling developers to create intricate workflows.

- Memory: LangChain incorporates memory functionalities that allow developers to retain and recall information throughout the language model application's execution, enhancing the continuity and context-awareness of interactions.
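One simple way to picture memory is a sliding window over the most recent conversation turns that is prepended to each new prompt. The sketch below assumes that approach; `WindowMemory` and `build_prompt` are hypothetical names and not part of LangChain's memory module.

```python
from collections import deque


class WindowMemory:
    """Keep the last max_turns (human, ai) exchanges of a conversation."""

    def __init__(self, max_turns: int = 2):
        self.turns = deque(maxlen=max_turns)

    def save(self, human: str, ai: str) -> None:
        self.turns.append((human, ai))

    def as_context(self) -> str:
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)


def build_prompt(memory: WindowMemory, question: str) -> str:
    """Prepend remembered turns so the model sees earlier context."""
    context = memory.as_context()
    return f"{context}\nHuman: {question}\nAI:" if context else f"Human: {question}\nAI:"


memory = WindowMemory(max_turns=2)
memory.save("My name is Ada.", "Nice to meet you, Ada.")
memory.save("I like chess.", "Chess is a great game.")
memory.save("I live in Graz.", "Graz is lovely.")  # evicts the oldest turn

prompt = build_prompt(memory, "Where do I live?")
```

The window size trades context for prompt length: a larger window recalls more of the conversation but sends more text to the model on every call.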

- Callbacks: Callbacks in LangChain provide hooks into various phases of language model application development, offering developers the flexibility to implement specific methods for logging, monitoring, streaming, and other custom tasks, tailoring the framework to their unique needs.
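The callback mechanism can be illustrated with a small runner that notifies handlers before and after each step. This is a plain-Python sketch of the pattern only; `LoggingHandler`, `on_start`, `on_end`, and `run_steps` are hypothetical names, not LangChain's callback interface.

```python
class LoggingHandler:
    """Collect events emitted at the start and end of each step."""

    def __init__(self):
        self.events = []

    def on_start(self, name: str, value) -> None:
        self.events.append(("start", name, value))

    def on_end(self, name: str, value) -> None:
        self.events.append(("end", name, value))


def run_steps(steps, value, handlers=()):
    """Run (name, function) steps in order, notifying every handler around each one."""
    for name, func in steps:
        for handler in handlers:
            handler.on_start(name, value)
        value = func(value)
        for handler in handlers:
            handler.on_end(name, value)
    return value


handler = LoggingHandler()
steps = [("strip", str.strip), ("upper", str.upper)]
result = run_steps(steps, "  hello  ", handlers=[handler])
```

The steps themselves know nothing about logging; swapping in a different handler (for metrics or streaming, say) changes what is observed without touching the pipeline.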

LangChain's modular design gives developers specialized tools for observability and chain execution, together with a set of core modules that address the various aspects of language model interaction. This modular approach makes applications more flexible and scalable and improves the overall developer experience.

Comprehensive Model Support¶

LangChain handles different model types, such as LLMs, Chat Models, and Messages. It standardizes input templates across models, so interaction stays consistent regardless of the underlying model. Output parsers transform LLM outputs into formats such as JSON, XML, or CSV, making the output easier to use in practice.

Key Takeaways¶

- LangChain addresses the need for a unified framework in LLM-powered application development.

- With its modular design and comprehensive data retrieval capabilities, it represents a significant step towards intelligent and adaptable NLP tools.

- The framework's model-agnostic templates, along with pre-defined chains and callbacks, let developers build with efficiency and precision.

- LangChain promotes an ecosystem that capitalizes on the strengths of large language models, which makes it valuable for developers in the field of AI and NLP.